RPC Redux

Thank you for coming here today, to the first conference dedicated to Finagle. (This is the written version of a talk I gave at FinagleCon, Aug 13, 2015 in San Francisco, CA.) Thanks to Travis, Chris, and the other organizers. Thank you to all the contributors, early adopters, and other supporters who have helped along the way.

We’ve been using Finagle in production at Twitter for over 4 years now. (While the first commit was on Oct 18, 2010, it was first used in production early the following year. Interestingly, the first production service using Finagle was Twitter’s web crawler, written in Java. This was done by Raghavendra Prabhu, who took the model to heart: the Java code was written in a very functional style; flatMaps abound.) We’ve learned a lot, and have had a chance to explore the design space thoroughly.

While Finagle is at the core of nearly every service at Twitter, the open source community has extended our reach much further than we could have imagined. I think this conference is a testament to that. I’m looking forward to the talks here today, and I’m curious to see how Finagle is used elsewhere. It’s exhilarating to see it being used in ways beyond our own imaginations.

There have been many changes to Finagle along the way, but the core programming model remains unchanged, namely the use of: Futures to structure concurrent code in a safe and composable manner; Services to define remotely invocable application components; and Filters to implement service-agnostic behavior. This is detailed in “Your Server as a Function.” (This paper was published at PLOS’13; the slides from the workshop talk are also available.) I encourage you all to read it.

I believe the success of this model stems from three things: simplicity, composability, and good separation of concerns.

First, each abstraction is reduced to its essence. A Future is just about the simplest way to understand a concurrent computation. A Service is not much more than a function returning a Future. A Filter is essentially a way of decorating a Service. This makes the model easy to understand and intuit. It’s almost always obvious what everything is for, and where some new functionality should belong.

Second, the data structures compose. Futures are combined with others, either serially or concurrently, to form more complex dependency graphs. Services and Filters combine as functions do: Filters may be stacked, and eventually combined with a Service; Services are combined into a pipeline. Compositionality lets us build complicated structures from simpler parts. It means that we can write, reason about, and test each part in isolation, and then put them together as required.

Finally, the abstractions promote good separation of concerns. Concurrency concerns are separated from how application components are defined. Behavior (filters) is separated from domain logic (services). This is more than skin deep: Futures encourage the programmer to structure her application as a set of computations that are related by data dependencies–a data flow. This divorces the semantics of a computation, which are defined by the programmer, from its execution details, which are handled by Finagle.
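The three abstractions fit in a few lines. The following is only a sketch under stated assumptions: it uses the standard library's scala.concurrent.Future as a stand-in for Finagle's Future, and the names counting, fallback, and lookup are hypothetical illustrations rather than Finagle APIs.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

implicit val ec: ExecutionContext = ExecutionContext.global

// A Service is a function from a request to a Future of a reply.
type Service[Req, Rep] = Req => Future[Rep]

// A Filter decorates a Service, yielding another Service.
type Filter[Req, Rep] = Service[Req, Rep] => Service[Req, Rep]

// Hypothetical filter: counts requests, whatever the service does.
val counter = new java.util.concurrent.atomic.AtomicInteger(0)
def counting[Req, Rep]: Filter[Req, Rep] =
  service => req => { counter.incrementAndGet(); service(req) }

// Hypothetical filter: replaces any failure with a fallback reply.
def fallback[Req, Rep](default: Rep): Filter[Req, Rep] =
  service => req => service(req).recover { case _: Exception => default }

// Hypothetical service: domain logic only, no behavior concerns.
val lookup: Service[Int, String] = id =>
  if (id > 0) Future.successful(s"user-$id")
  else Future.failed(new IllegalArgumentException("bad id"))

// Filters stack like functions, and are finally applied to a Service.
val stacked: Service[Int, String] =
  counting[Int, String].andThen(fallback[Int, String]("unknown"))(lookup)

// Futures combine serially (flatMap) or concurrently (zip).
val serial: Future[String] =
  stacked(1).flatMap(a => stacked(-1).map(b => s"$a,$b"))
val both: Future[(String, String)] = stacked(2).zip(stacked(3))
```

Note that lookup knows nothing about counting or fallbacks, and neither filter knows what a user is; each piece can be tested alone and then assembled.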

In some sense, and certainly in retrospect, this design–Futures, Services, Filters–feels inevitable. I don’t think it’s possible to have any of these properties in isolation. They are a package deal. It’s difficult to imagine good compositionality without also having separation of concerns. Composition is quickly defeated by contending needs. For example, we can readily compose Services and Filters because Futures allow them to act like regular functions. If execution semantics were tied into the definition of a Service, it would be difficult to imagine simple composition.

Where RPC breaks down

The simplicity and clarity of Your Server As a Function belie the true nature of writing and operating a distributed RPC-based system. Using Finagle in practice requires much of developers and operators both–most serious deployments of Finagle require users to become expert in its internals: Finagle must be configured, statistics must be monitored, and applications must be profiled so that we stand a chance of understanding the system when it misbehaves.

Let’s consider two illustrative examples where this simplicity breaks down: creating resilient systems, and creating flexible systems.

Example 1, resilient systems

This quality of being able to store strain energy and deflect elastically under a load without breaking is called ‘resilience’, and it is a very valuable characteristic in a structure. Resilience may be defined as ‘the amount of strain energy which can be stored in a structure without causing permanent damage to it’.

A resilient software system is one which can withstand strain in the form of failure, overload, and varying operating conditions.

Resilience is an imperative in 2015: our software runs on the truly dismal computers we call datacenters. Besides being heinously complex–and we all know complexity is the enemy of robustness–they are unreliable and prone to operator error. Datacenters are, however, the only computers we know how to build cheaply and at scale. We have no choice but to build into our software the resiliency forfeited to our hardware.

That I called it a resilient system is no mistake. Of the problems we encounter on a daily basis, resiliency is perhaps the most demanding of our capacity for systems thinking. An individual process, server, or even a datacenter is not by itself resilient. The system must be.

Thus it’s no surprise that resilient systems are created by adopting a resiliency mindset. It’s not sufficient to implement resilient behavior at a single point in an application; it must be everywhere considered.

Finagle, occupying the boundary between independently failing servers, supplies many important tools for creating resilient systems. Among these are: timeouts, failure detection, load balancing, queue limits, request rejection, and retries. We piece these together just so to create resilient and well-behaved software. (Of course, this covers only some aspects of resilience. Many tools and strategies are not available to Finagle because it does not have access to application semantics. For example, Finagle cannot tell whether a certain computation is semantically optional from an application’s point of view.)

Here’s an incomplete picture of what it’s like to create a resilient server with Finagle today.

Timeouts. You use server timeouts to limit the amount of time spent on any individual request. Client timeouts are used to maximize your chances of success while not getting bogged down by slow or unresponsive servers. At the same time, you want to leave some room for requests to be retried when they can. These timeouts also have to consider application requirements. (Perhaps a request is not usefully served if the user is unwilling to wait for its response.)

Retries. You tune your retries so that you have a chance of retrying failed or timed out operations (when this can be done safely). At the same time, you want to make sure that retries will not exhaust your server’s timeout budget, and that you won’t amplify failures and overwhelm downstream systems that are already faltering.

Queue sizes, connection pools. You want to tune inbound queue sizes so that the server cannot be bogged down by handling too many requests at the same time. Perhaps you use Iago to run what you believe are representative load tests, so that you understand your server’s load response and tune queue sizes accordingly. Similarly, you configure your client’s connection pool sizes to balance resource usage, load distribution, and concurrency. You also account for your server’s fan out factor, so that you won’t overload your downstream servers.

Failure handling. You need to figure out how to respond to which failures. You may choose to retry some (with a fixed maximum number of retries); you might make use of alternate backends for others; still others might warrant downgraded results.

Many remarkably resilient servers are constructed this way. It’s a lot of work, and a lot of upkeep: the operating environment, which includes upstream clients and downstream servers, changes all the time. You have to remain vigilant indeed so that your system does not regress. Luckily we have many tools to make sure our servers don’t regress, but even these can test only so much. The burden on developers and operators remains considerable.

It’s noteworthy that a great number of these parameters are intertwined in inextricable ways. If a server’s request timeouts aren’t harmonized with its clients’ request timeouts, their pool sizes, and concurrency levels, you’re unlikely to arrive at your desired behavior. When these parameters fall out of lockstep–and this is exceedingly easy to do!–you may compromise the resiliency of your system. Indeed, a good number of the issues we have had in production are related to exactly this.
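To make one such dependency concrete, here is a minimal sketch of a consistency check between a server's request timeout and the timeout and retry settings of a client it uses downstream. ClientConfig, ServerConfig, worstCase, and harmonized are hypothetical names invented for this illustration, and the check deliberately ignores pool sizes, concurrency, and sequential downstream calls.

```scala
import scala.concurrent.duration._

// Hypothetical, simplified view of two of the knobs discussed above.
final case class ClientConfig(perTryTimeout: FiniteDuration, maxRetries: Int)
final case class ServerConfig(requestTimeout: FiniteDuration)

// Worst-case time spent on one downstream call, retries included.
def worstCase(c: ClientConfig): FiniteDuration =
  c.perTryTimeout * (c.maxRetries + 1).toLong

// The downstream call should fit inside the server's own timeout;
// otherwise the last retries can never finish before the server gives up.
def harmonized(server: ServerConfig, downstream: ClientConfig): Boolean =
  worstCase(downstream) <= server.requestTimeout
```

With a 500 ms server timeout, a 200 ms per-try timeout leaves room for one retry but not two. Changing either side without the other silently breaks the relationship.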

More later.

Example 2, flexible systems

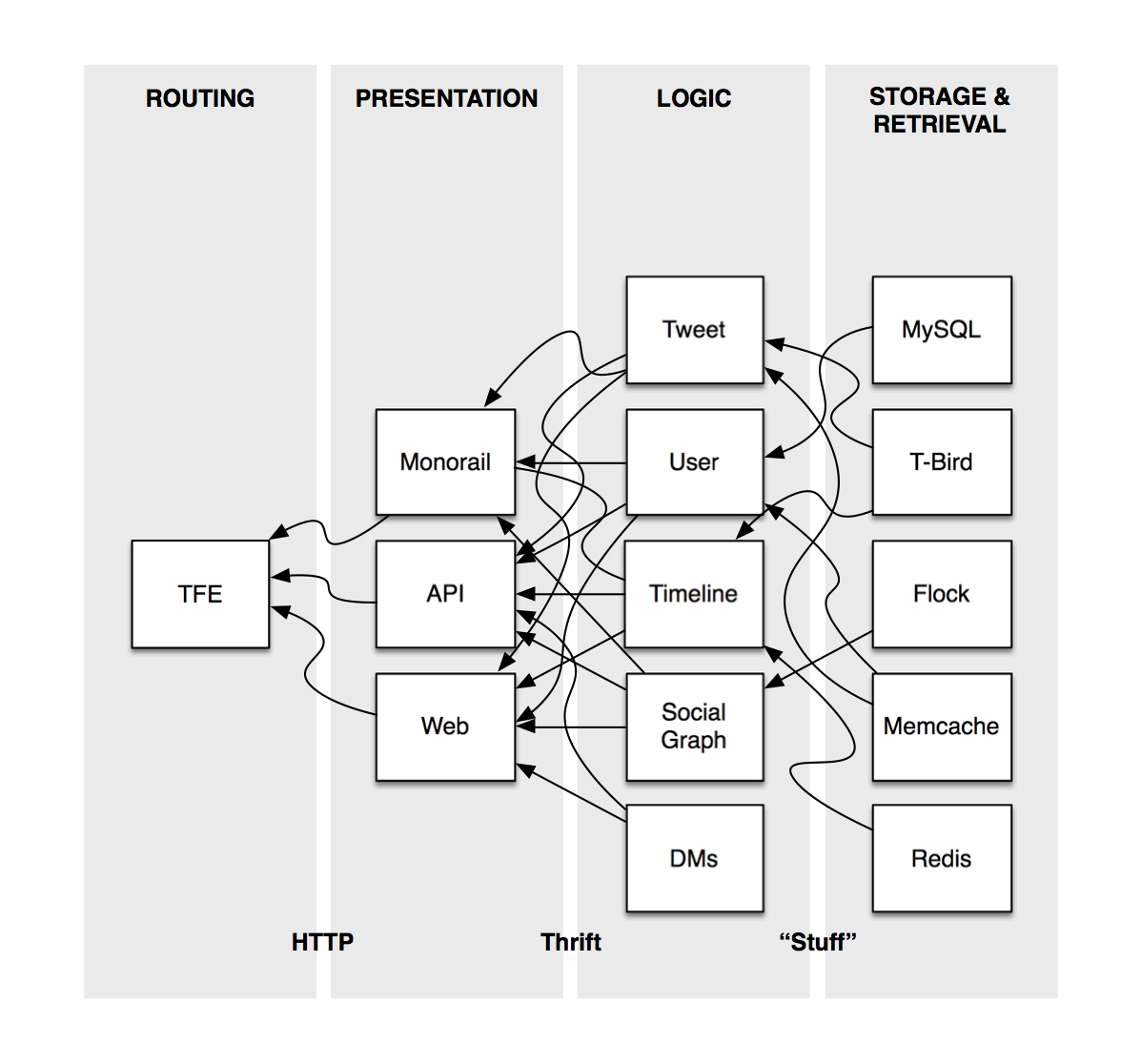

Consider a modern Service Oriented Architecture. This is Twitter’s. (Note that this is highly simplified, showing only the general outline and layering of Twitter’s architecture.)

Many things that are quite simple in a monolithic application become quite complicated when its modules are distributed as above.

Consider production testing a new version of a service. In a monolithic application, this is a simple task: you might check out the branch you’re working on, compile the application, and test it wholesale. In order to deploy that change, you might deploy that binary on a small number of machines, make sure they look good, and then roll it out to a wider set of machines.

In a service architecture, it is much more complicated. You could deploy a single instance of the service you have changed, but then you’d need to coordinate with every upstream layer in order to redirect requests to your test service.

It gets worse when changes need to be coordinated across modules, increasing again the burden of coordination. For example, your change might require an upstream service to change, perhaps because results need to be interpreted differently.

What about inter-module debugging? Again, in monolithic systems, our normal toolset applies readily: debuggers, printf, logging, Dtrace, and heapster help greatly.

We also have problems that do not have ready analogs in monolithic applications. For example, how do we balance traffic across alternative deployments of a service?

Decomposing RPC

We’ve been implicitly operating with a very simple model of RPC, namely: the role of an RPC system is to link up two services so that a client may dispatch requests to a server. Put another way, the role of Finagle is to export service implementations from a server, and to import service implementations from remote servers.

In practice, Finagle does much more. Each Service is really a replica set, and this requires load balancing, connection pooling, failure detection, timeouts, and retries in order to work properly. And of course Finagle also implements service statistics, tracing, and other sine qua nons. But this is only to fulfill the above, basic model: to export and to import services.

In many ways, this is the least we can get away with, but, as we have seen, service architectures in practice end up being quite complicated: they are complicated to implement; they are unwieldy to develop for and to operate. The RPC model doesn’t provide direct solutions to these kinds of problems.

I think this calls for thinking about how we might elevate the RPC model to account for this reality.

A service model

Why should we solve these problems in the RPC layer?

A common approach is to introduce indirection at the infrastructure level. You might use software load balancers to redirect traffic where appropriate, or to act as middleware to handle resiliency concerns. You might deploy specialized staging frontends, so that new services can be deployed in the context of an existing production system.

This approach is problematic for several reasons.

—They are almost always narrow in scope, and difficult to generalize. For example, a staging setup might work well for frontends that are directly behind a web server, but what if you want to stage deeper down the service stack?

—They also tend to operate below the session layer, and don’t have access to useful application level data such as timeouts, or whether an operation is idempotent.

—They constitute more infrastructure. More complexity. More components that can fail.

Further, as we have already seen, many RPC concerns are inextricably entwined with those of resiliency and flexibility. It seems prudent to consider them together.

I think what we need is a coherent model that accounts for resiliency and flexibility. This will give us the ability to create common infrastructure that can be combined freely to accomplish our goals. A good model will be simple, give freedom back to the implementors, and disentangle application logic from behavior from configuration.

I think it’s useful to break our service model down into three building blocks: a component model, a linker, and an interaction model.

A component model defines how system components are defined and structured. A good component model is general–we cannot presuppose what users want to write–but must also afford the underlying implementation a great deal of freedom. Further, a component model should not significantly limit how components are structured or how they are pieced together; it should account for both applications and behavior.

A linker is responsible for piecing components together, for distributing traffic. It should do so in a way that allows flexibility.

An interaction model tells how components communicate with each other. It governs how failures are interpreted, how to safely retry operations, and how to degrade service when needed. A good interaction model is essential for creating a truly resilient system.

You may have noticed that these play the part of a module system (component model, linker) and a runtime (interaction model).

We’ve been busy moving Finagle towards this model, to solve the very problems outlined here. Some of it has already been implemented, and is even in production; much of it is ongoing work.

Component model

Finagle already has a good, proven component model: Futures, Services, and Filters.

There isn’t much that I would change here, though one common issue is that it lacks a uniform way of specifying and interpreting failures.

By way of Futures, Services can return any Throwable as an error. This “open universe” approach to error handling is problematic because we cannot assign semantics to errors in a standard way.

We recently introduced Failure as a standard error value in Finagle.

final class Failure private[finagle](
  private[finagle] val why: String,
  val cause: Option[Throwable] = None,
  val flags: Long = 0L,
  protected val sources: Map[Failure.Source.Value, Object] = Map.empty,
  val stacktrace: Array[StackTraceElement] = Failure.NoStacktrace,
  val logLevel: Level = Level.WARNING
) extends Exception(why, cause.getOrElse(null))

Failures are flagged. Flags represent semantically crisp and actionable properties of the failure, such as whether it is restartable, or if it resulted from an operation being interrupted. With flags, server code can express the kind of a failure, while client code can interpret failures in a uniform way, for example to determine whether a particular failure is retryable.

Failures also include sourcing information, so that we have a definitive way to tell where a failure originated, remotely or locally.
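The idea of flagged failures can be sketched independently of Finagle. In the following, SimpleFailure is a simplified stand-in for Failure, and the flag names and bit values are assumptions made for illustration, not the library's actual constants.

```scala
// Illustrative flags; names and values are assumptions, not Finagle's own.
object Flags {
  val Restartable: Long = 1L << 0
  val Interrupted: Long = 1L << 1
}

// A simplified stand-in for Finagle's Failure.
final case class SimpleFailure(
  why: String,
  flags: Long = 0L,
  remote: Boolean = false // crude sourcing information: remote or local
) extends Exception(why) {
  def isFlagged(flag: Long): Boolean = (flags & flag) == flag
  def flagged(flag: Long): SimpleFailure = copy(flags = flags | flag)
}

// Client-side interpretation: retry only what is marked restartable.
def retryable(t: Throwable): Boolean = t match {
  case f: SimpleFailure => f.isFlagged(Flags.Restartable)
  case _                => false // arbitrary Throwables carry no semantics
}
```

The server decides what kind of failure it is reporting; the client needs no knowledge of the server's exception types to decide whether a retry is safe.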

Linker

Finagle clients are usually created by providing a static host list or a dynamic one using ServerSet. (A ServerSet is a dynamic host list stored in ZooKeeper. It is updated whenever nodes register, deregister, or fail to maintain a ZooKeeper heartbeat.)

While ServerSets are useful–they allow servers to come and go without causing operational hiccups–they are also limited in their power and flexibility.

We have worked to introduce a symbolic naming system into Finagle. This amounts to a kind of flexible RPC routing system. It uses symbolic addresses as destinations, and allows the application to alter its interpretation as appropriate. We call it Wily.

Wily is an RPC routing system that works by dispatching payloads to hierarchically-named logical destinations. Wily uses namespaces to affect routing on a per-transaction basis, allowing applications to modify behavior by changing the interpretation of names without altering their syntax.

To explain how it works, let’s start with how the various concepts involved are represented in Finagle.

Finagle is careful to define interfaces around linking, separating the symbolic, or abstract, notion of a name from how it is interpreted.

In Finagle, addresses specify physical, or concrete, destinations. Addresses, by way of SocketAddress, tell how to connect to other components. Since Finagle is inherently “replica-oriented”, where every destination is a set of functionally identical replicas of a component, a fully bound address is represented by a set of SocketAddress.

sealed trait Addr
case class Bound(addrs: Set[SocketAddress]) extends Addr
case class Failed(cause: Throwable) extends Addr
object Pending extends Addr
object Neg extends Addr

Addresses can occupy other states as well, to account for failure (Failed), pending resolution (Pending), or nonexistence (Neg).

Whereas physical addresses represent concrete endpoints (a set of IP addresses), a name is symbolic, and may be purely abstract. To account for this, Name has two variants,

sealed trait Name
case class Path(path: finagle.Path) extends Name
case class Bound(addr: Var[Addr]) extends Name

The first, Path, describes an abstract name via a path. Paths are rather like filesystem paths: they describe a path in a hierarchy. For example, the path /s/user/main might name the main user service. Note that paths are abstract: the name /s/user/main names only a logical entity, and gives the implementation full freedom to interpret it. More on this later.

The second variant of Name represents a destination that is bound. That is, an address is available. Note here that distribution rears its ugly head: any naming mechanism must account for variability. The address (replica set) is going to change with time, and so it is not sufficient to work with a single, static Addr. Rather, we make use of a Var to model a variable, or changeable, value. (Vars provide a way to do “self adjusting computation”, making it simple to compose changeable entities.) Thus anything that deals with names must account for variability. For example, Finagle’s load balancer is dynamic, and is able to add and remove endpoints from consideration as a bound name changes.
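A rough sketch of how a changeable address drives a consumer follows. SimpleVar and Balancer are hypothetical, single-threaded stand-ins written for this illustration; they are far simpler than Finagle's Var and load balancers, and the Addr type is redeclared locally so the example is self-contained.

```scala
import java.net.{InetSocketAddress, SocketAddress}

sealed trait Addr
object Addr {
  final case class Bound(addrs: Set[SocketAddress]) extends Addr
  final case class Failed(cause: Throwable) extends Addr
  case object Pending extends Addr
  case object Neg extends Addr
}

// Minimal stand-in for a Var: a value plus observers notified on change.
final class SimpleVar[T](init: T) {
  private var current: T = init
  private var observers: List[T => Unit] = Nil
  def observe(f: T => Unit): Unit = { observers ::= f; f(current) }
  def update(next: T): Unit = { current = next; observers.foreach(_(next)) }
}

// Toy balancer that tracks the replica set as the bound name changes.
final class Balancer(addr: SimpleVar[Addr]) {
  private var endpoints: Vector[SocketAddress] = Vector.empty
  private var next = 0
  addr.observe {
    case Addr.Bound(addrs) => endpoints = addrs.toVector.sortBy(_.toString)
    case _                 => endpoints = Vector.empty // nothing to route to
  }
  def size: Int = endpoints.size
  // Round-robin over whatever endpoints are currently known.
  def pick(): Option[SocketAddress] =
    if (endpoints.isEmpty) None
    else { next = (next + 1) % endpoints.size; Some(endpoints(next)) }
}
```

The balancer never asks where addresses come from; it only reacts to changes, which is what lets a static host list, a ServerSet, or an interpreted path all feed the same machinery.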

Users of Finagle rarely encounter Name directly. Rather, Finagle will parse strings into names, through its resolver mechanism.

With the help of the resolver we write:

// A static host set.
Http.newClient("host1:8080,host2:8080,host3:8080")

// A (dynamic) endpoint set derived from the
// ServerSet at the given ZK path.
Http.newClient("zk!myzkhost:2181!/my/zk/path")

// A purely symbolic name.
// It must be bound by an interpreter.
Http.newClient("/s/web/frontend")

And of these, only the last results in a Name.Path.
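The distinction between the three forms can be sketched as a small classifier. This is not Finagle's resolver: Dest, Hosts, Scheme, Symbolic, and classify are hypothetical names, and the rules below (a leading slash means a path, a “!” separates a scheme from its argument, anything else is a comma-separated host list) are assumptions inferred only from the three examples above.

```scala
sealed trait Dest
final case class Hosts(addrs: List[(String, Int)]) extends Dest
final case class Scheme(scheme: String, arg: String) extends Dest
final case class Symbolic(path: String) extends Dest

def classify(dest: String): Dest =
  if (dest.startsWith("/")) Symbolic(dest) // purely symbolic: a path
  else dest.split("!", 2) match {
    // "scheme!argument": the argument is handed to the named resolver.
    case Array(scheme, arg) => Scheme(scheme, arg)
    // Otherwise assume a static, comma-separated host:port list.
    case _ =>
      Hosts(dest.split(',').toList.map { hp =>
        val i = hp.lastIndexOf(':')
        (hp.take(i), hp.drop(i + 1).toInt)
      })
  }
```

Only the Symbolic case defers the question of where the destination lives; the other two already name concrete hosts or a concrete lookup mechanism.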

Interpretation

Interpretation is the process of turning a Path into a Bound. Finagle uses a set of rewriting rules to do this. These rules are called delegations, and are written in a dtab, short for delegation table. Delegations are written as

src => dest

for example

/s => /zk/zk.local.twitter.com:2181

A path matches a rule if its prefix matches the source; rules are applied by replacing that prefix with the destination.

In the above example, the path /s/user/main would be rewritten to /zk/zk.local.twitter.com:2181/user/main.
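A single delegation can be sketched as a prefix rewrite over path segments. Delegation, segments, and render are hypothetical names for this illustration. Matching whole segments rather than raw characters is an assumption of this sketch; it keeps the rule /s from matching a path such as /srv/user/main.

```scala
// "/s/user/main" becomes Vector("s", "user", "main").
def segments(path: String): Vector[String] =
  path.split('/').filter(_.nonEmpty).toVector

def render(segs: Vector[String]): String = segs.mkString("/", "/", "")

// A delegation src => dest applies when src is a segment-wise prefix.
final case class Delegation(src: String, dest: String) {
  def rewrite(path: String): Option[String] = {
    val p = segments(path)
    val s = segments(src)
    if (p.startsWith(s)) Some(render(segments(dest) ++ p.drop(s.length)))
    else None
  }
}
```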

Rewriting isn’t by itself very interesting; we must be able to terminate! And remember our goal is to turn a path into a bound. For this, we have a special namespace of paths, /$. Paths that begin with /$ access implementations that are allowed to interpret names. These are called Namers. For example, to access the serverset namer, we make use of the path

/$/com.twitter.serverset

The serverset namer interprets its residual path (everything after /$/com.twitter.serverset). For example, the path

/$/com.twitter.serverset/sdzookeeper.local.twitter.com:2181/twitter/service/cuckoo/prod/read

names the serverset at the ZooKeeper path

/twitter/service/cuckoo/prod/read

on the ZooKeeper ensemble named

sdzookeeper.local.twitter.com:2181

Namers can either recurse (with a new path, which in turn is interpreted by the dtab), or else terminate (with a bound name, or a negative result). In the example above, the namer com.twitter.serverset makes use of Finagle’s serverset implementation to return a bound name with the contents of the serverset named by the given path.

Rules are evaluated together in a delegation table, and apply bottom-up–the last matching rule wins. Rewriting is a recursive process: it continues until no more rules apply. Evaluating the name /s/user/main in the delegation table

/zk => /$/com.twitter.serverset
/srv => /zk/remote
/srv => /zk/local
/s => /srv/prod

yields the following rewrites:

/s/user/main

/srv/prod/user/main

/zk/local/prod/user/main

*/$/com.twitter.serverset/local/prod/user/main

(* denotes termination: here the Namer returned a Bound.)

When a Namer returns a negative result, the interpreter backtracks. If, in the previous example, /$/com.twitter.serverset/local/prod/user/main yielded a negative result, the interpreter would backtrack to attempt the next definition of /srv, namely:

/s/user/main
[1] /srv/prod/user/main
[2] /zk/local/prod/user/main
[3] /$/com.twitter.serverset/local/prod/user/main
[neg]
/s/user/main
[2] /zk/remote/prod/user/main
[3] /$/com.twitter.serverset/remote/prod/user/main

Thus we see that this Dtab represents the environment where we first look up a name in the local serverset, and if it doesn’t exist there, the remote serverset is attempted instead.

The resulting semantics are similar to having several namespace overlays: a name is looked up in each namespace, in the order of their layering.
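The evaluation rules described above (prefix rewriting, last matching rule first, termination at a namer, backtracking on a negative result) can be sketched as a small recursive interpreter. This is an illustration only, not Finagle's implementation: resolve, dtab, and fakeServerset are hypothetical, the namer simply consults a fixed set of names instead of ZooKeeper, and the depth limit is an assumption added to keep cyclic tables from looping forever.

```scala
sealed trait Result
final case class Bound(at: String) extends Result
case object Neg extends Result

type Path = Vector[String]
def parse(s: String): Path = s.split('/').filter(_.nonEmpty).toVector
def show(p: Path): String = p.mkString("/", "/", "")

final case class Dentry(src: Path, dest: Path)
def dtab(rules: (String, String)*): Vector[Dentry] =
  rules.toVector.map { case (s, d) => Dentry(parse(s), parse(d)) }

// Paths under /$ are handed to the namer and terminate. Otherwise matching
// rules are tried from the bottom up; a negative result falls through to
// the next (earlier) matching rule.
def resolve(path: Path, rules: Vector[Dentry], namer: Path => Result, depth: Int = 0): Result =
  if (path.headOption.contains("$")) namer(path.drop(1))
  else if (depth > 100) Neg // guard against cyclic delegations
  else {
    val attempts = rules.reverseIterator
      .filter(r => path.startsWith(r.src))
      .map(r => resolve(r.dest ++ path.drop(r.src.length), rules, namer, depth + 1))
    attempts.collectFirst { case b: Bound => b }.getOrElse(Neg)
  }

// Hypothetical namer: pretends that only the given serversets exist.
def fakeServerset(existing: Set[String])(residual: Path): Result =
  residual match {
    case "com.twitter.serverset" +: rest if existing(show(rest)) => Bound(show(rest))
    case _ => Neg
  }
```

Because the candidate rewrites are produced lazily, the remote serverset is only consulted when the local lookup comes back negative, which matches the overlay behavior described above.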

This arrangement allows for flexible linking. For example, I can use one dtab in a production environment, perhaps even one for each datacenter; other dtabs can be used for development, staging, and testing.

Symbolic naming provides a form of location transparency: the user’s code only specifies what is to be linked, not how (where). This gives the runtime freedom to interpret symbolic names however is appropriate for the given environment; location-specific knowledge is encoded in the dtabs we’re operating with.

Wily takes this idea even further: Dtabs are themselves scoped to a single request; they are not global to the process or fixed for its lifetime. Thus we have a base dtab that is amended as necessary to account for behavior that is desired when processing a single request. To see why this is useful, consider the case of staging servers. We might want to start a few instances of a new version of a server to test that it is functioning properly, or even for development or testing purposes. We might want to send a subset of requests (for example, those requests originated by the developers, or by testers) to the staged server, but leave the rest untouched. This is easy with per-request dtabs: we can simply amend an entry for selected requests, for example:

/s/user/main => /s/user/staged

which would cause any request destined for /s/user/main to be dispatched to /s/user/staged.

Finally, per-request dtabs are transmitted across component boundaries, so that these rewrite rules apply for the entire request tree. This allows us to amend the dtab in a central place, while ensuring it is interpreted uniformly across the entire request tree. This required us to modify our RPC protocol, Mux. We also have support in Finagle’s HTTP implementation.
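Per-request amendment can be sketched as appending a request's own rules to a base table before interpretation. Everything here is hypothetical and simplified for illustration: Rules, Request, step, rewriteAll, and destinationFor are invented names, paths are plain strings, there are no namers or backtracking, and the fuel counter is an assumption that bounds the number of rewrites.

```scala
// A dtab as an ordered list of (source prefix, destination) rules.
type Rules = Vector[(String, String)]

// Apply the last matching rule once, if any rule matches.
def step(rules: Rules, name: String): Option[String] =
  rules.reverseIterator.collectFirst {
    case (src, dest) if name == src || name.startsWith(src + "/") =>
      dest + name.drop(src.length)
  }

// Keep rewriting until no rule applies (bounded by fuel).
def rewriteAll(rules: Rules, name: String, fuel: Int = 32): String =
  step(rules, name) match {
    case Some(next) if fuel > 0 => rewriteAll(rules, next, fuel - 1)
    case _                      => name
  }

val baseDtab: Rules = Vector("/s" -> "/srv/prod")

// A request carries its own amendments alongside its payload.
final case class Request(user: String, localDtab: Rules = Vector.empty)

// The base dtab is amended only for the request being processed.
def destinationFor(req: Request, name: String): String =
  rewriteAll(baseDtab ++ req.localDtab, name)
```

Because the amendments travel with the request, the same override would be seen by every server further down the request tree, while all other traffic continues to use the base table.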

This has turned out to be quite a flexible mechanism. Among other things, we have used it for:

Environment definition. As outlined above, we make use of dtabs to define a system’s environment, allowing us to deploy it in multiple environments without changing code or maintaining specialized configuration.

Load balancing. We’ve also made use of dtabs to direct traffic across datacenters. The mechanism allows our frontend web servers to maintain a smooth, uniform way of naming destinations. The interpretation layer incorporates rules and also manual overrides to dynamically change the interpretation of these names.

Staging and experiments. As described above, we can change how names are interpreted on a per-request basis. This allows us to implement staging and experiment environments in a uniform way with no specialized infrastructure. A developer simply has to instantiate her service (using Aurora), and instruct our frontend web servers, through the use of specialized HTTP headers, to direct some amount of traffic to her server.

Dynamic instrumentation. At a recent hack week, Oliver Gould, Antoine Tollenaere, and I made use of this mechanism to implement Dtrace-like instrumentation for distributed systems. A small DSL allowed the developer to sample traffic (based on paths and RPC methods), and process the selected traffic. Using this system, we could introspect the system in myriad ways. For example, we could easily print the payloads between every server involved in satisfying a request. We could also modify the system’s behavior. For example, we could test hypotheses about the system’s behavior under certain failure or slow-down conditions.

Interaction model

The final building block we need is an interaction model. Our interaction model should govern how components talk to each other. What does this mean?

Today, Finagle has only a weakly defined interaction model, but it’s probably more than 50% of the core implementation! (That is not to say it isn’t there–it very much is!–rather, much of it is implicit.) These components include load balancing, queue limits, timeouts and retry logic, and how errors are interpreted. They are a ready source of configuration knobs, many of which must be tuned well for good results.

For example, consider the problem of making sure that a service is simultaneously: well conditioned, so that it prefers to reject some requests instead of degrading service for all; timely, so that users aren’t left waiting; and well behaved, so that it doesn’t overwhelm downstream resources with requests they cannot handle, and so that it cannot overwhelm itself and get into a situation from which it cannot recover. (You could also say that a service should respond to backpressure.)

Tuning a system to accomplish these goals in concert requires a fairly elaborate exercise. At Twitter, we often end up running load tests to determine this, or use parameters derived from production instances.

This arrangement is problematic for several reasons.

First, the mechanisms we employ–timeouts, retry logic, queueing policies, etc.–serve multiple and often conflicting goals: resource management, liveness, and SLA maintenance. Should we tolerate a higher downstream error rate? Increase the retry budget. But then this might increase our concurrency level! Create bigger connection pools. But some of these may be created to handle only temporary spikes, which could affect the rate of promoted objects. Lower the connection TTL! And so on. Without a complete understanding of the system, it is difficult to even anticipate the net effect of changing an individual parameter.

Second, such configuration is inherently static, and fails to respond to varying operating conditions and application changes over time.

Last, and most troubling, is the fact that this arrangement severely compromises abstraction and thus modularity: the configuration of clients, based on a set of low-level parameters and their assumed interactions, precludes many changes to Finagle. Systems have come to rely on implementation details–and also the interaction of different implementation details!–that are specified not at all.

We have tied the hands of the implementors.

Introducing goals

We can usually formulate high-level goals for the behavior that we’re configuring with low-level knobs. (And if not: how do you know what you’re configuring for?)

I think our goals usually boil down to two. Service Level Objectives, or SLOs, tell what response times are acceptable. Cost objectives, or COs, tell how much you’re willing to pay for it.

Perhaps the simplest SLO is a deadline. That is, the time after which a response is no longer useful. A simple cost objective is a ceiling for a service’s fan out: that is, what is an acceptable ratio of inbound to outbound requests over some time window.

The implications of such a seemingly simple change in perspective are quite profound. Suddenly, we have an understanding, on the semantic level, of what the objectives are while servicing a request. This allows us to introduce dynamic behavior. For example, with an SLO, an admission controller can treat a service as a black box, observing its runtime latency fingerprint. The controller can reject requests that are unlikely to be serviceable in a timely manner.
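One way such a controller might look, purely as a sketch: it remembers recent latencies and admits a request only if a high percentile of them still fits within the time remaining before the request's deadline. AdmissionController, its window and percentile parameters, and the policy itself are assumptions made for illustration, not a description of what Finagle does.

```scala
import scala.concurrent.duration._

// Hypothetical black-box controller driven only by observed latencies.
final class AdmissionController(window: Int = 100, percentile: Double = 0.9) {
  private var samples: Vector[FiniteDuration] = Vector.empty

  // Record the latency of a completed request, keeping a bounded window.
  def observe(latency: FiniteDuration): Unit =
    samples = (samples :+ latency).takeRight(window)

  // The chosen percentile of recent latencies, if anything was observed.
  def estimate: Option[FiniteDuration] =
    if (samples.isEmpty) None
    else {
      val sorted = samples.sorted
      val idx = math.min(sorted.size - 1, (percentile * sorted.size).toInt)
      Some(sorted(idx))
    }

  // Admit when the estimate fits in the remaining time; with no
  // observations yet, admit everything.
  def admit(remaining: FiniteDuration): Boolean =
    estimate.forall(_ <= remaining)
}
```

The controller needs no knowledge of queue sizes or timeouts; the deadline alone tells it which work is no longer worth starting.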

Service Level Objectives can also cross service boundaries with a little help from the RPC protocol. For example, Mux allows the caller to pass a deadline to the callee. HTTP and other extensible protocols may pass these via standard headers.

This is still an active area of ongoing work, but I’ll show two simple examples where this is working today.

Deadlines

Deadlines are represented in Finagle with a simple case class

case class Deadline(timestamp: Time, deadline: Time) extends Ordered[Deadline] {
  def compare(that: Deadline): Int = this.deadline.compare(that.deadline)
  def expired: Boolean = Time.now > deadline
  def remaining: Duration = deadline - Time.now
}

The current deadline is defined in a request context, and may be set and accessed at any time. The context is serialized across service boundaries, so that deadlines set in a client are available from the callee-server as well.

We can now define a filter that rejects requests that are expired.

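import com.twitter.finagle.{Failure, Service, SimpleFilter}
import com.twitter.finagle.context.{Contexts, Deadline}
import com.twitter.util.Future

class RejectExpiredRequests[Req, Rep] extends SimpleFilter[Req, Rep] {
  // Reject requests whose deadline has already passed; serve all others.
  def apply(req: Req, service: Service[Req, Rep]): Future[Rep] =
    Contexts.broadcast.get(Deadline) match {
      case Some(d) if d.expired =>
        Future.exception(Failure.rejected)
      case _ => service(req)
    }
}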

Even this simple filter is useful. It implements a form of load conditioning. Consider for example servers that are just starting up, or have just undergone garbage collection. These are likely to experience large delays in request queue processing. This filter makes sure these requests are rejected by the server before spending computational resources needlessly. We are choosing to reject useless work instead of bogging the server down further. This is all possible because we have an actual definition of what “useless work” is.

Even in this simple form, we could go further. For example, we might choose to interrupt a future after the request’s deadline has expired, so that we can terminate processing, and again avoid wasting resources on needless computation.

Ruben Oanta and Bing Wei are working on more sophisticated admissions controllers that should be able to also account for observed latencies, so that we can reject requests even earlier.

Fan out factor

We have implemented a fan out factor as a simple cost objective. The fan out factor describes an acceptable ratio of issues (Finagle’s attempts to satisfy requests, which include retries) to requests (from client code). For example, a factor of 1.2 means that Finagle will not increase downstream load by more than 20% over nominal load.

The implementation smooths this budget over a window, so as to tolerate and smooth out spikes. Conversely, we don’t want to create spikes: the window makes sure that you can’t build up a large reservoir only to expel it all at once.

Again this presents a way to specify a high level goal, and let Finagle attempt to satisfy it for you. The developer is freed from reasoning about fixed retry budgets, trading off the need to tolerate transient failures, while at the same time avoiding load amplification by way of retries. Instead a single number tells it all.
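A rough sketch of how such a budget could be accounted for: every request deposits a fraction of a retry, every retry withdraws a whole one, and the reservoir is capped. FanOutBudget is a hypothetical class written for this illustration; in particular, the fixed cap is an assumption that stands in for the time window described above, and integer credits (hundredths of a retry) are used to avoid floating-point rounding.

```scala
// percentExtra = 20 corresponds to a fan out factor of 1.2.
final class FanOutBudget(percentExtra: Int, maxRetriesBanked: Int) {
  require(percentExtra >= 0 && maxRetriesBanked >= 0)
  private var credits = 0 // in hundredths of a retry
  var requests = 0L       // requests made by client code
  var issues = 0L         // attempts actually sent, retries included

  // Each first attempt earns a fraction of a retry, up to the cap.
  def request(): Unit = {
    requests += 1
    issues += 1
    credits = math.min(maxRetriesBanked * 100, credits + percentExtra)
  }

  // A retry is allowed only if a whole retry's worth of credit is banked.
  def tryRetry(): Boolean =
    if (credits >= 100) { credits -= 100; issues += 1; true }
    else false
}
```

With a 20% budget, five requests pay for one retry, and the cap limits how many retries can be released in a burst after a quiet period.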

Conclusion

We’re moving towards a model of RPC that operates at a higher level of abstraction. Instead of treating RPC as a simple remote dispatch primitive, we have introduced high-level targets: symbolic names, service level objectives, and cost objectives.

Symbolic naming gives us abstract destinations whose concrete destinations can be evaluated by the operating environment. They decouple the abstract notion of a destination from its physical interpretation.

Service and cost objectives remove services’ dependencies on low-level configuration and implementation details, and instead give Finagle a great deal of freedom to implement better strategies.

We have untied the hands of the implementors.

Thank you for coming out today, and enjoy the rest of the conference!